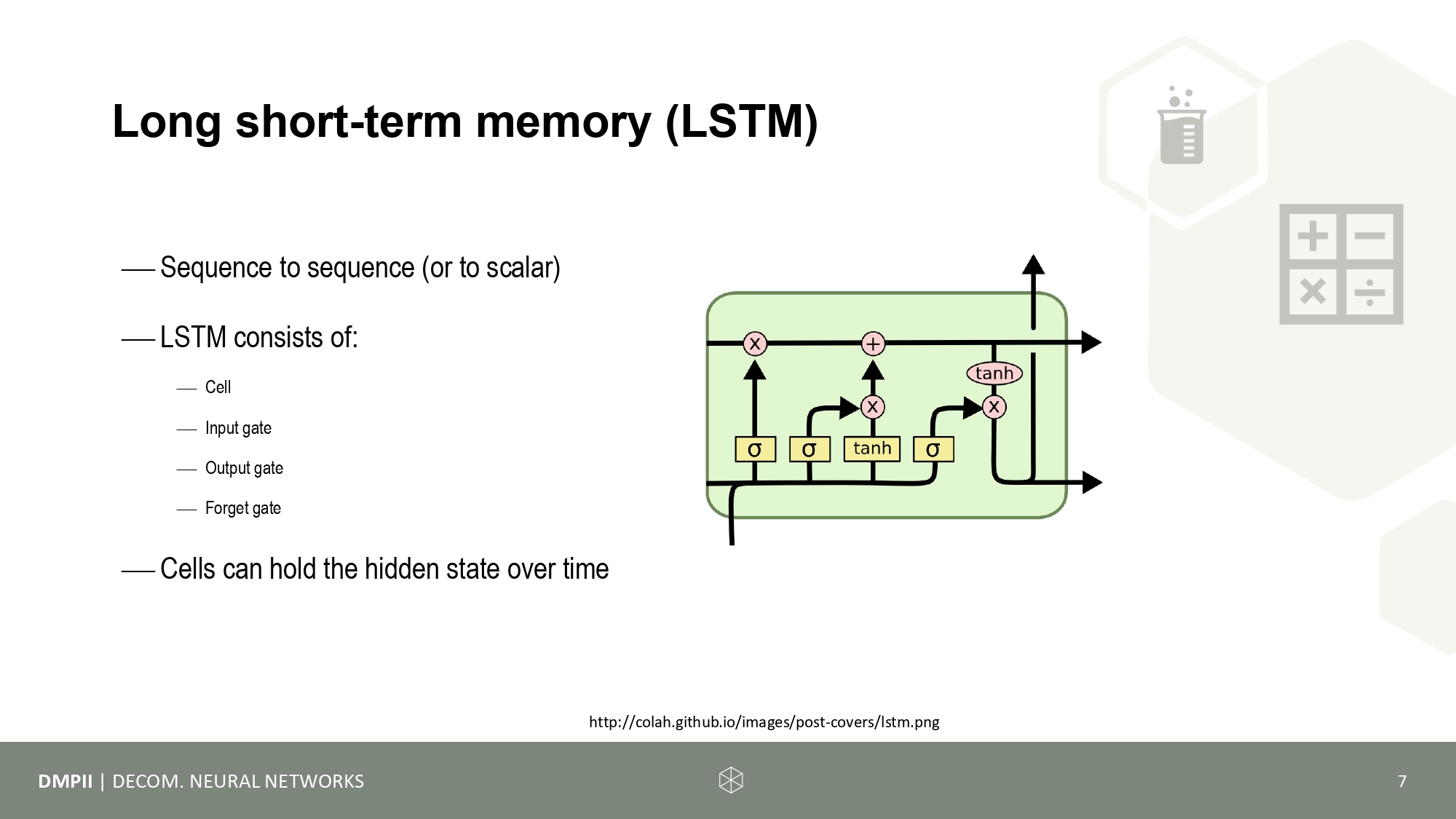

The fifth session addresses the variety of architectures in machine learning. We will distinguish them by types of layers, common design strategies, and common and uncommon modifications to the design or the layers. Concepts such as feed-forward networks, convolutional neural networks, and recurrent neural networks are explained and discussed. Modifications like attention and transformers, which have recently been used widely in models such as ChatGPT, will of course not be left out.

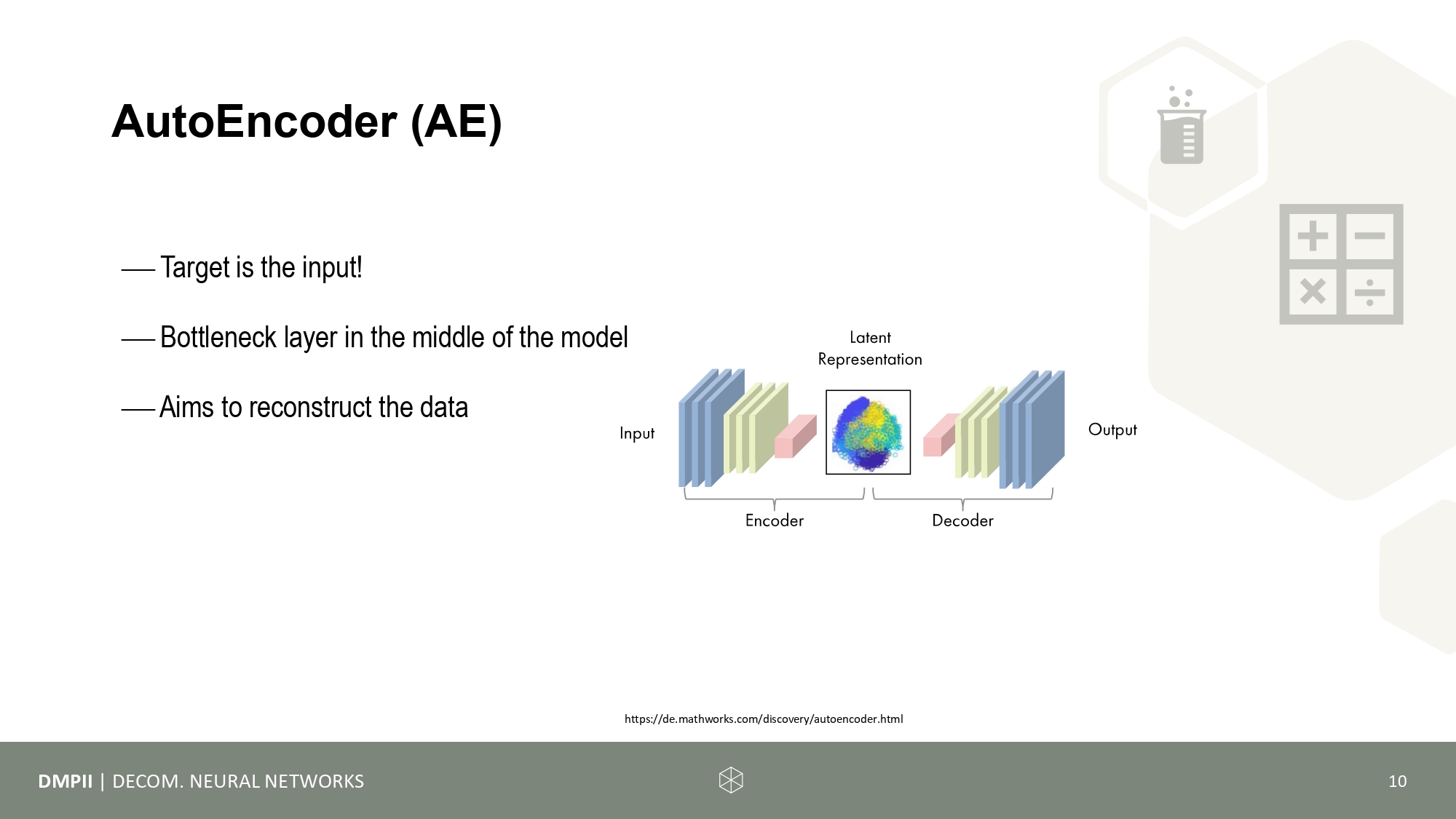

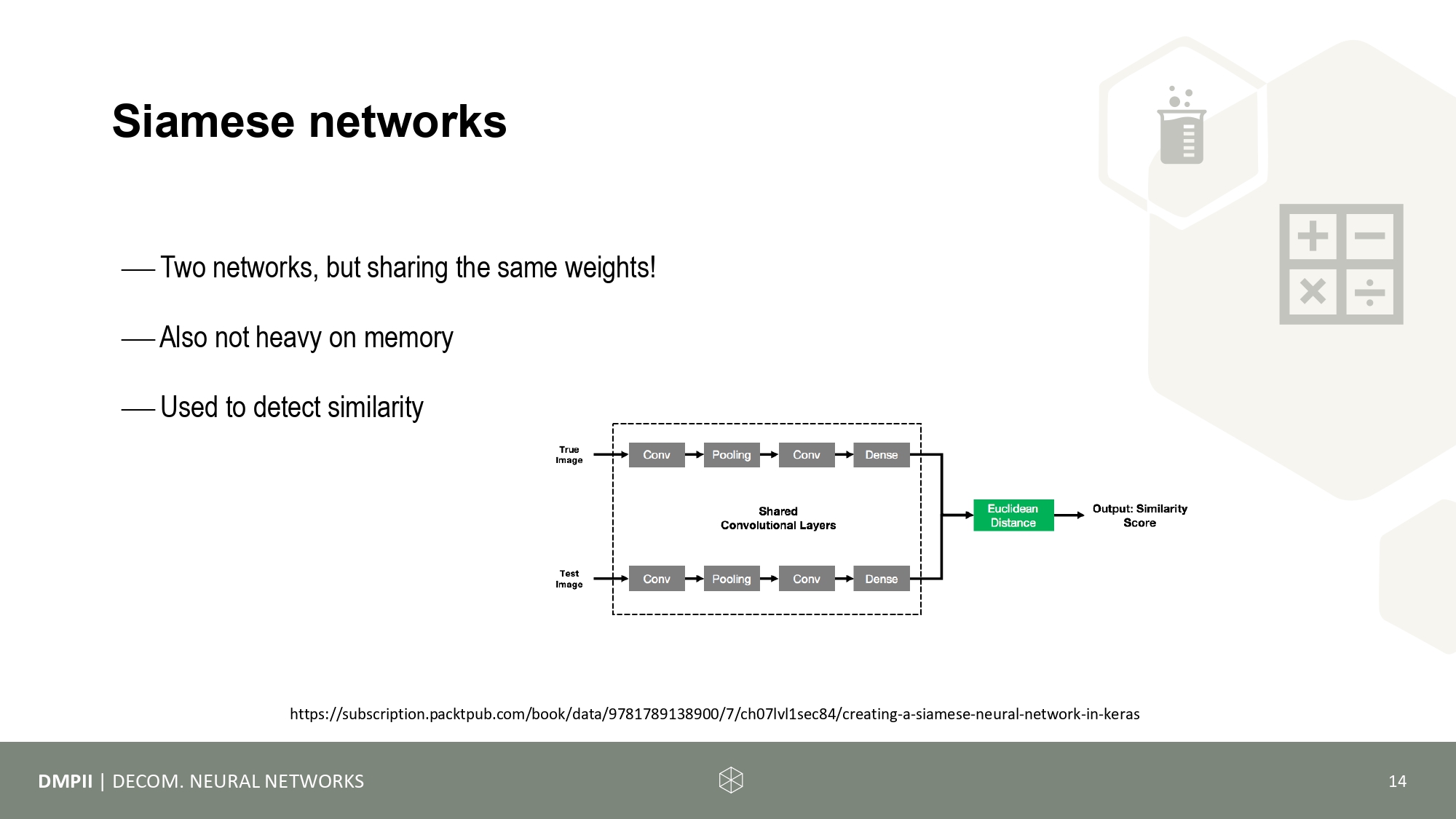

We will also show you common architectures such as (variational) autoencoders, generative adversarial networks, and Siamese networks. Together we will discuss which tasks can be solved with them.

- Read this blog article about different architectures. If questions come up while reading, please note them down for the session.

- Write down one or two ideas (preferably related to your project) and which architecture you would use to solve them. Don't worry if your attempted solution doesn't fully work; we will work through it together.