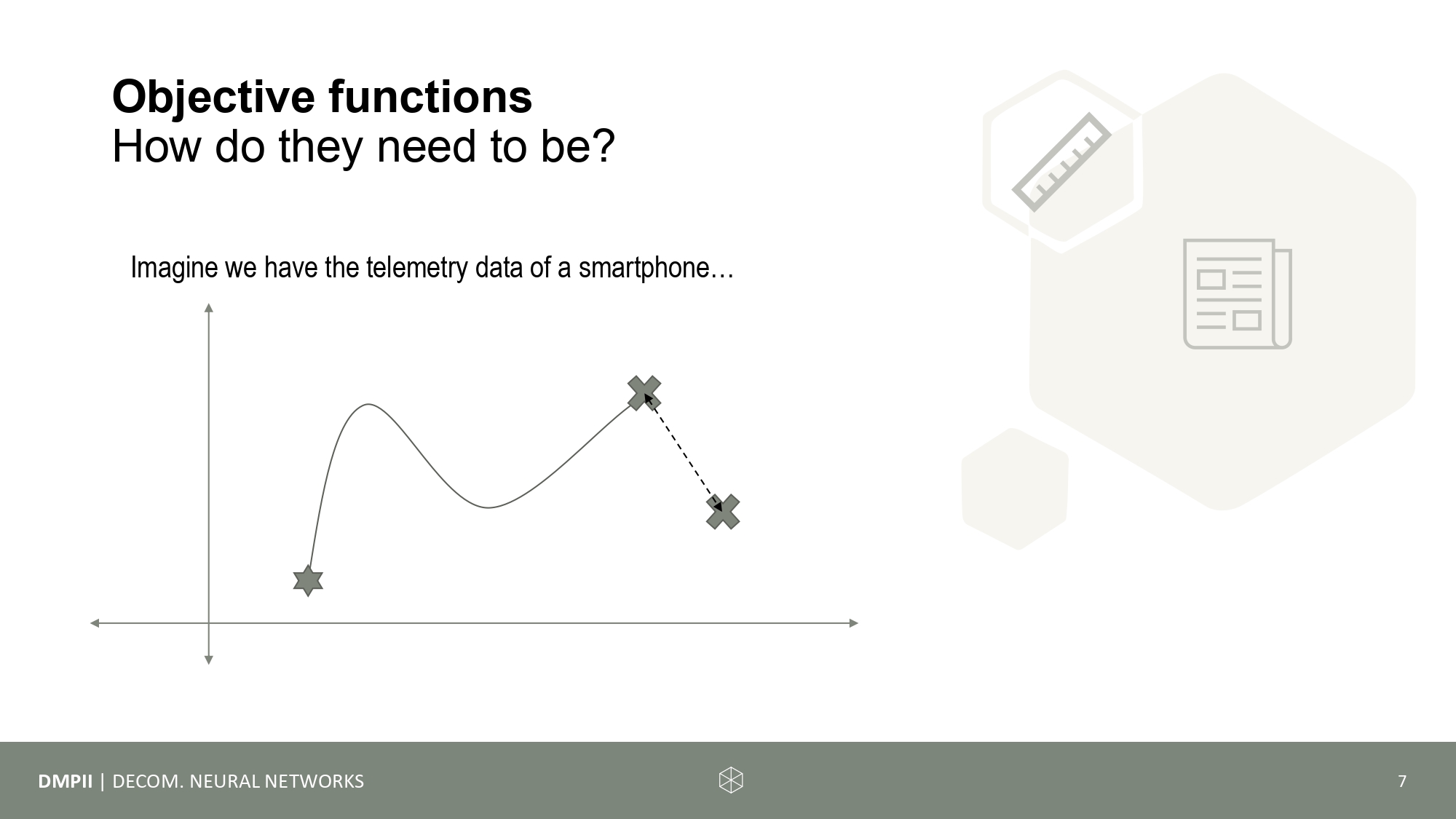

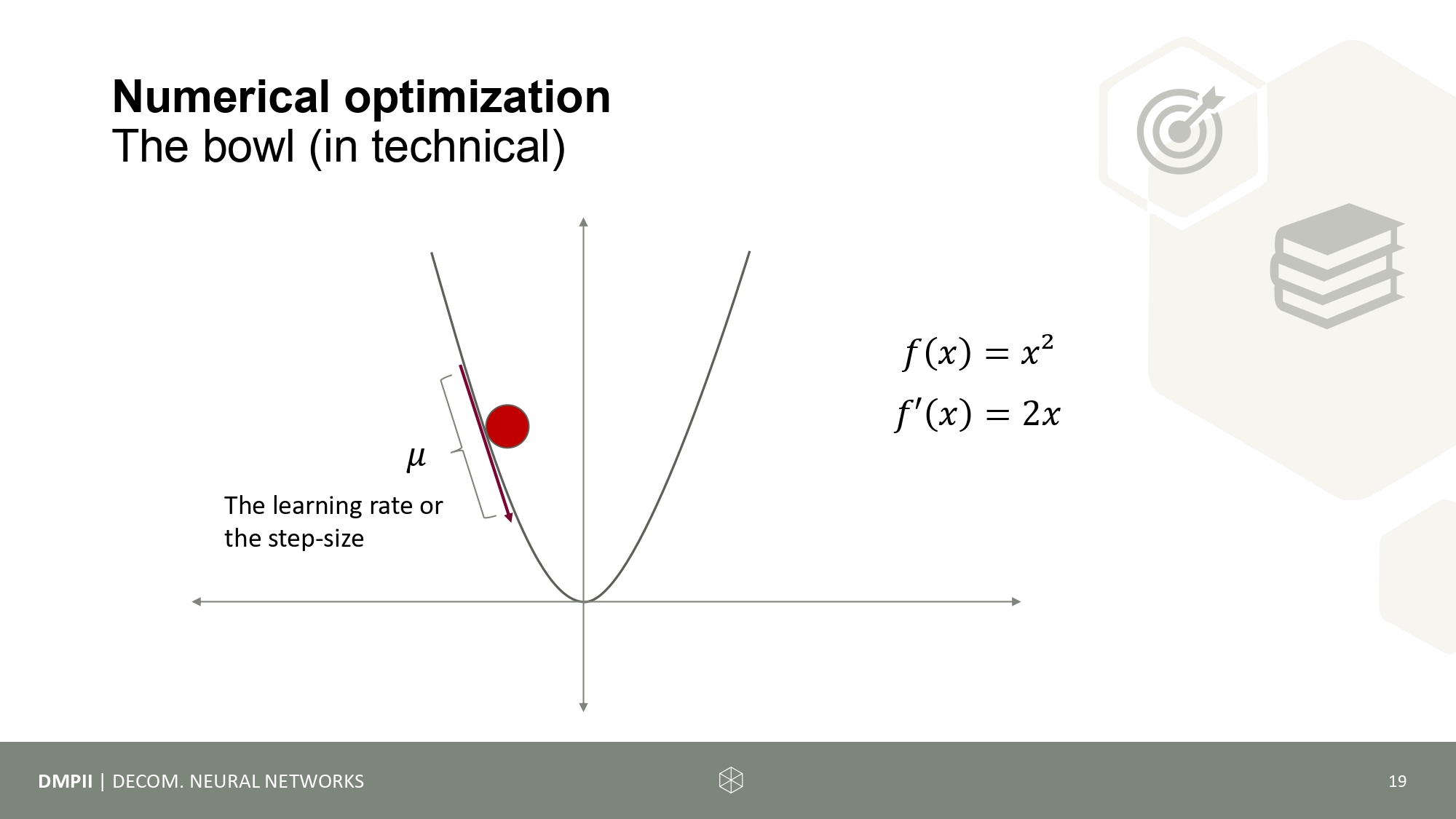

The fourth session addresses the question "How is a neural network trained on data?" in depth. The goal of this session is to understand the learning process of a neural network. We will therefore take a closer look at numerical optimization and at how to make "learning goals" quantifiable. We investigate different objective functions and discuss distance metrics. Further, we will introduce you to the method of backpropagation.
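To make the "learning goal" and backpropagation a bit more concrete, here is a minimal Python sketch. It assumes a single sigmoid neuron and the squared error (the squared Euclidean distance between prediction and target) as the objective. The helpers `forward_backward` and `numerical_grad` are hypothetical names chosen for illustration. The backward pass applies the chain rule from the loss back to each parameter, and a finite-difference estimate checks the result.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def squared_error(prediction: float, target: float) -> float:
    # Squared Euclidean distance between prediction and target (1D case)
    return (prediction - target) ** 2


def forward_backward(w: float, b: float, x: float, y: float):
    """Forward pass of one sigmoid neuron, then backpropagation via the chain rule."""
    # Forward pass: keep the intermediate values, the backward pass reuses them
    z = w * x + b
    a = sigmoid(z)
    loss = squared_error(a, y)
    # Backward pass: multiply local derivatives from the loss back to w and b
    dloss_da = 2.0 * (a - y)   # d/da of (a - y)^2
    da_dz = a * (1.0 - a)      # derivative of the sigmoid
    dloss_dz = dloss_da * da_dz
    dloss_dw = dloss_dz * x    # dz/dw = x
    dloss_db = dloss_dz        # dz/db = 1
    return loss, dloss_dw, dloss_db


def numerical_grad(w: float, b: float, x: float, y: float, eps: float = 1e-6):
    """Central finite differences, used only to check the backpropagated gradient."""
    def loss_at(w_: float, b_: float) -> float:
        return squared_error(sigmoid(w_ * x + b_), y)

    dw = (loss_at(w + eps, b) - loss_at(w - eps, b)) / (2 * eps)
    db = (loss_at(w, b + eps) - loss_at(w, b - eps)) / (2 * eps)
    return dw, db


loss, dw, db = forward_backward(w=0.5, b=-0.2, x=1.5, y=1.0)
num_dw, num_db = numerical_grad(w=0.5, b=-0.2, x=1.5, y=1.0)
print(f"loss={loss:.4f}")
print(f"backprop: dw={dw:.6f}, db={db:.6f}")
print(f"numeric:  dw={num_dw:.6f}, db={num_db:.6f}")
```

Both gradient estimates should agree to several decimal places. A real network repeats the same idea layer by layer, which is what the 3Blue1Brown video walks through.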

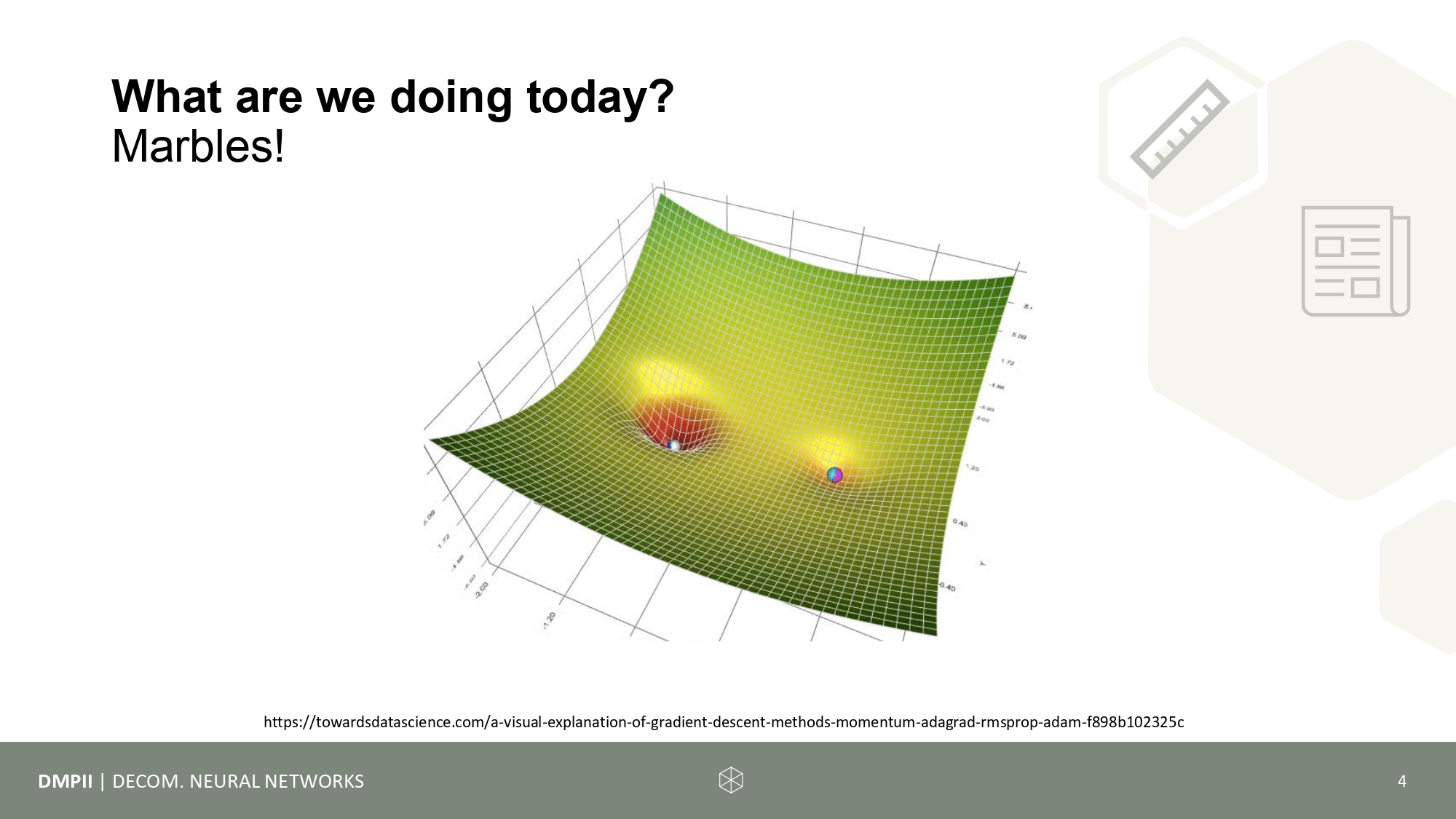

To train a network, we have to SEE what is going on. Together we will plot the loss during training and go through different scenarios. What do we do when the loss stops decreasing? How do we escape a potential local minimum?
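The following Python sketch shows what "seeing" the loss can look like. It runs plain gradient descent on a hypothetical one-dimensional, non-convex loss function (not a real network) and records the loss after every step. The function `x**4 - 3*x**2 + x + 4` has a local minimum near x ≈ 1.13 and a lower, global minimum near x ≈ -1.30. The starting point alone decides where the run ends up.

```python
def loss(x: float) -> float:
    # Hypothetical non-convex "loss landscape": local minimum near x ≈ 1.13,
    # global minimum near x ≈ -1.30
    return x**4 - 3 * x**2 + x + 4


def grad(x: float) -> float:
    # Analytic derivative of loss(x)
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0: float, lr: float = 0.01, steps: int = 500):
    """Plain gradient descent that records the loss before the first and after every step."""
    x = x0
    history = [loss(x)]
    for _ in range(steps):
        x -= lr * grad(x)
        history.append(loss(x))
    return x, history


x_local, hist_local = gradient_descent(x0=2.0)
x_global, hist_global = gradient_descent(x0=-2.0)
print(f"start  2.0 -> x={x_local:.3f}, final loss={hist_local[-1]:.3f}")
print(f"start -2.0 -> x={x_global:.3f}, final loss={hist_global[-1]:.3f}")
```

Passing `hist_local` and `hist_global` to a plotting library (for example `matplotlib.pyplot.plot`) shows both curves flattening out. The run started at 2.0 stalls at a clearly higher loss because it is stuck in the local minimum. A flat loss curve alone therefore does not tell you that you have found the best solution.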

- Warning! Reading about optimization beforehand is at your own risk. Yes, it contains some math, but it is much simpler than it looks :)

- Plot the loss during different training runs.

- How does changing a single hyperparameter affect the loss?

- How do different optimizers affect training?

- Watch the 3Blue1Brown video about the math of backpropagation. Feel free to ask anything about it next time!

- Search for the concept of momentum in optimizers. How does it work?
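As a starting point for the optimizer and momentum questions, here is a minimal Python sketch of one common formulation, heavy-ball momentum: `v = beta * v - lr * grad; x = x + v`. Libraries may parameterize momentum differently. The test problem is a hypothetical, ill-conditioned quadratic bowl that is 25 times steeper along y than along x. The values `lr=0.04` and `beta=0.9` are assumptions picked for illustration. Setting `beta=0.0` turns the same loop into plain gradient descent.

```python
def loss(p):
    x, y = p
    # Ill-conditioned quadratic bowl: 25 times steeper along y than along x
    return 0.5 * (x**2 + 25 * y**2)


def grad(p):
    x, y = p
    return (x, 25 * y)


def steps_to_converge(lr, beta, start=(5.0, 1.0), tol=1e-6, max_steps=10_000):
    """Heavy-ball momentum; beta=0.0 reduces it to plain gradient descent.

    Returns the number of steps until the loss drops below tol.
    """
    p = list(start)
    v = [0.0, 0.0]  # velocity: exponentially weighted sum of past update steps
    for step in range(1, max_steps + 1):
        g = grad(p)
        for i in range(2):
            v[i] = beta * v[i] - lr * g[i]  # keep part of the previous step
            p[i] += v[i]
        if loss(p) < tol:
            return step
    return max_steps


steps_gd = steps_to_converge(lr=0.04, beta=0.0)
steps_momentum = steps_to_converge(lr=0.04, beta=0.9)
print(f"plain gradient descent: {steps_gd} steps")
print(f"with momentum:          {steps_momentum} steps")
```

On this bowl, plain gradient descent is limited by the steep y-direction. It only converges for `lr` below 2/25 = 0.08, so progress along the shallow x-direction stays slow. Momentum accumulates the consistent x-component of the gradient over several steps and therefore needs fewer steps here. The same accumulated velocity is also why momentum is often described as helping an optimizer roll through small bumps in the loss landscape.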